Hey Pinkies 🦩, did you know that roughly 50% of the traffic to your website comes from probes or bad actors 🤖? I operate a SaaS with ~600 websites, so I see crazy traffic all the time.

In my SaaS, I just created a new rule to ban actors who modify the subdomain and use local DNS. I caught 500+ IPs in the last 4 hours.
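The post doesn't say how the rule is implemented. As a rough illustration only, here is a minimal sketch that assumes the rule amounts to "the request's Host header doesn't match any domain the SaaS actually serves." `KNOWN_HOSTS`, `is_suspicious_host`, `collect_offenders`, and the example domains are all hypothetical.

```python
# Hypothetical sketch: flag IPs that send a Host header we don't serve.
KNOWN_HOSTS = {"shop.example.com", "blog.example.com"}  # hypothetical tenant domains


def normalize_host(host_header: str) -> str:
    """Lower-case the Host header, drop any :port suffix and trailing dot."""
    host = host_header.strip().lower()
    if host.startswith("["):
        # Bracketed IPv6 literal, e.g. "[2001:db8::1]:443"; keep the brackets.
        end = host.find("]")
        return host[: end + 1] if end != -1 else host
    return host.split(":", 1)[0].rstrip(".")


def is_suspicious_host(host_header: str, known_hosts: frozenset = frozenset(KNOWN_HOSTS)) -> bool:
    """True when the normalized Host header is not one of our domains."""
    return normalize_host(host_header) not in known_hosts


def collect_offenders(requests: list) -> set:
    """Given (client_ip, host_header) pairs, return IPs that sent unknown hosts."""
    return {ip for ip, host in requests if is_suspicious_host(host)}
```

For example, a request for `admin.shop.example.com:443` or a bare IP like `10.0.0.1` would be flagged, while `SHOP.example.com.` normalizes to a known host and passes. A real rule would likely also need wildcard or custom-domain handling.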

It got me thinking: we could crowd-source a firewall. I could open-source the list of bad-actor IPs, and a package would let you protect your website and contribute the bad IPs you catch back to the pool.
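No such package exists yet, so this is only a hypothetical sketch of the core idea: merge a shared pool (which might contain single IPs or CIDR ranges) with locally caught offenders, then check incoming IPs against the result. It uses Python's standard `ipaddress` module; the pool format and the function names `build_blocklist` and `is_blocked` are assumptions.

```python
import ipaddress
from typing import Iterable, List, Union

Net = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


def build_blocklist(shared_pool: Iterable[str], local_offenders: Iterable[str]) -> List[Net]:
    """Merge the (hypothetical) shared pool with our own offenders into collapsed networks."""
    entries = [e.strip() for e in [*shared_pool, *local_offenders] if e.strip()]
    # strict=False turns a bare IP into a /32 (IPv4) or /128 (IPv6) network.
    nets = [ipaddress.ip_network(e, strict=False) for e in entries]
    v4 = [n for n in nets if n.version == 4]
    v6 = [n for n in nets if n.version == 6]
    # collapse_addresses merges overlapping/adjacent ranges, one IP version per call.
    return list(ipaddress.collapse_addresses(v4)) + list(ipaddress.collapse_addresses(v6))


def is_blocked(ip: str, blocklist: List[Net]) -> bool:
    """True if the address falls inside any network on the blocklist."""
    addr = ipaddress.ip_address(ip)
    return any(addr.version == net.version and addr in net for net in blocklist)
```

Collapsing the list keeps lookups small when many contributors report IPs from the same range; a production version would probably want a prefix tree or a database index instead of a linear scan.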

I'd love to hear how you guys deal with this, and what you think about crowd-sourcing something like this.

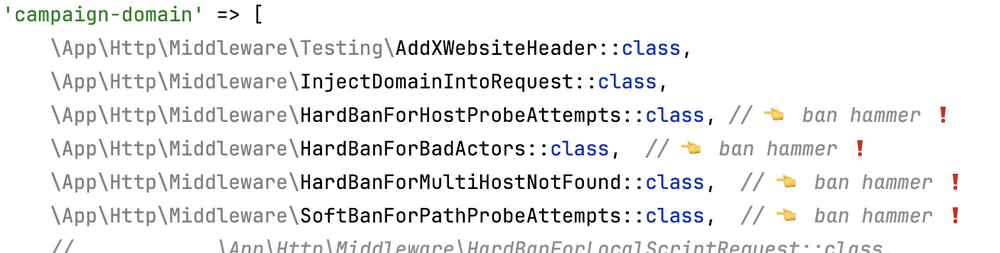

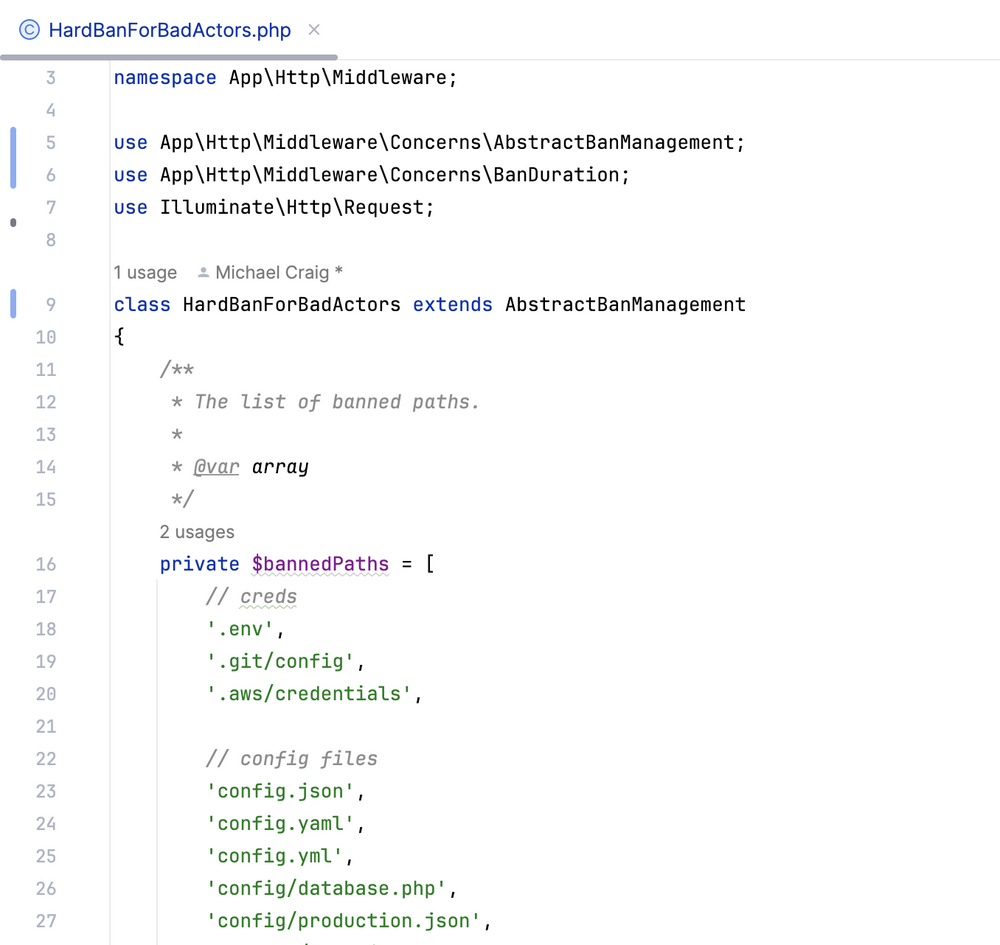

The screenshots above show what I see and give you an idea of the things I scan for before laying down the ban hammer.

Yes, via the middleware. Right now I'm caching my offenders:

gist.github.com/MikeCraig418/573d92ed7cc8d793cfc125d3eacfa09f
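The gist itself isn't reproduced here. As an illustration of the same general idea only (not the gist's code), here is a minimal Python sketch of middleware that consults an in-memory ban cache with a time-to-live before handling a request. `BanCache`, `ban_middleware`, the one-day default TTL, the handler signature, and the 403 response are all assumptions.

```python
import time
from typing import Callable, Dict, Tuple

Response = Tuple[int, str]  # hypothetical (status code, body) pair


class BanCache:
    """In-memory map of banned IP -> expiry time, with a fixed TTL."""

    def __init__(self, ttl_seconds: float = 24 * 3600,
                 clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so tests can control time
        self._expires: Dict[str, float] = {}

    def ban(self, ip: str) -> None:
        self._expires[ip] = self.clock() + self.ttl

    def is_banned(self, ip: str) -> bool:
        expiry = self._expires.get(ip)
        if expiry is None:
            return False
        if self.clock() >= expiry:
            del self._expires[ip]  # ban lapsed; forget the entry
            return False
        return True


def ban_middleware(cache: BanCache,
                   handler: Callable[[str], Response]) -> Callable[[str], Response]:
    """Wrap a (hypothetical) handler that takes the client IP and returns a Response."""
    def wrapped(client_ip: str) -> Response:
        if cache.is_banned(client_ip):
            return (403, "Forbidden")  # short-circuit before any app logic runs
        return handler(client_ip)
    return wrapped
```

Letting bans expire keeps the cache from growing without bound and gives reassigned IPs a way back in; an app spread across multiple servers would more likely keep this in a shared cache store than in process memory.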

I wonder if there is an existing IP dataset that I could incorporate as an additional layer of filtering.

We're going live with @ozdemiru in 30 minutes. Feel free to drop by and say hello.

www.twitch.tv/make_dev

Thanks again @ozdemiru, that was a lot of fun. It was a pleasure seeing what you built today!

Hey Pinkies!

Come hang out with @ozdemiru and me TOMORROW at 12:00PM PST.

We're live streaming, talking tech, and showing off.

www.twitch.tv/make_dev

Hey Scrapers!

Can anyone recommend roach-php.dev over www.crwlr.software?

Btw, I've used Dusk for scraping before. I have a small project that requires scraping, and the appeal of both packages above is that I could perhaps deploy on Vapor for some serverless action... so really, it's me being ~~lazy~~ efficient with DevOps.

Hey Pinkies! No live stream today. We will pick up again next week at 12pm PST!